This guide explains how to build a multihomed EVPN-VXLAN fabric using Arista CloudVision Portal (CVP). There are two common design models: MLAG and non-MLAG. Many engineers prefer the non-MLAG multihoming model because of its better interoperability, reduced cabling costs, and adherence to open standards. We’ll use a custom Studio workflow in CVP to automate this configuration, since no built-in solution exists for this specific use case.

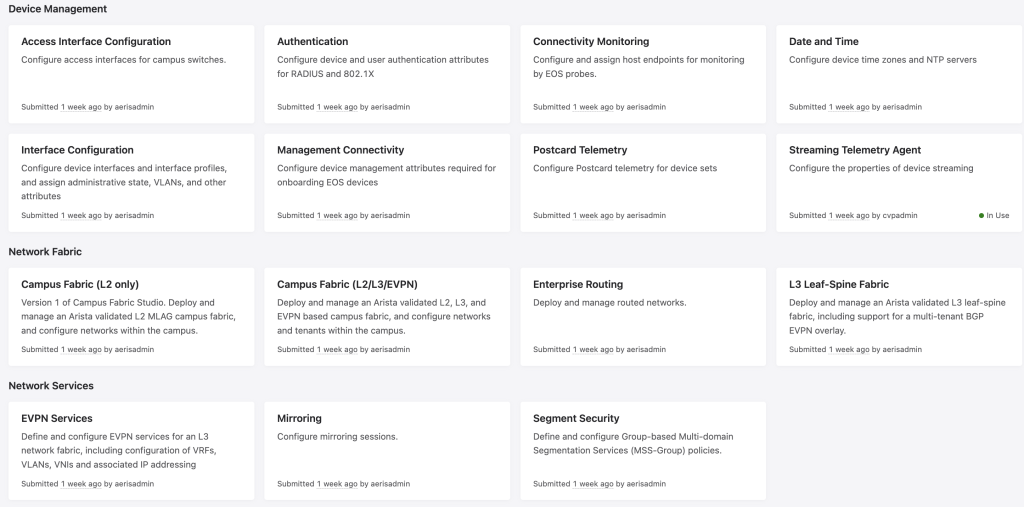

What is Studio?

Studio is a tool within CloudVision Portal that lets you automate switch configuration. It uses forms and Python/Mako scripts to generate and push configurations. You choose a template, fill in the required fields, and Studio applies the configuration to your devices.

When built-in Studios aren’t enough—like for Active-Active EVPN-VXLAN Multihoming—you can build a custom Studio to fit your needs.

Multihoming EVPN-VXLAN

Multihoming EVPN-VXLAN increases redundancy without using MLAG. It relies on EVPN route-type 1 (Ethernet Auto-Discovery) and route-type 4 (Ethernet Segment). Leaf switches connect directly to multiple spines and operate independently. You create logical port-channels using Ethernet Segment Identifiers (ESI). The ESI is a 10-byte value that defines which links belong to the same multihomed segment.
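To make the 10-byte ESI concrete, here is a minimal Python sketch that renders an integer as an ESI string and compares two values. The helper names (`format_esi`, `same_segment`) and the sample values are hypothetical, and the five-groups-of-four-hex-digits notation is an assumption about how the identifier is usually written; check your platform documentation for the exact syntax it expects.

```python
def format_esi(value: int) -> str:
    """Render an integer as a 10-byte ESI: five colon-separated groups of four hex digits."""
    if not 0 <= value < 2 ** 80:
        raise ValueError("ESI must fit in 10 bytes (80 bits)")
    hex_digits = f"{value:020x}"  # 10 bytes -> 20 hex digits, zero padded
    groups = [hex_digits[i:i + 4] for i in range(0, 20, 4)]
    return ":".join(groups)


def same_segment(esi_a: str, esi_b: str) -> bool:
    """Links belong to the same multihomed segment when their ESIs are equal."""
    return esi_a.lower() == esi_b.lower()


# Hypothetical values: two leaves share one ESI for the same dual-homed host.
leaf1_po10 = format_esi(0x10)
leaf2_po10 = format_esi(0x10)
leaf1_po20 = format_esi(0x20)

print(leaf1_po10)                            # 0000:0000:0000:0000:0010
print(same_segment(leaf1_po10, leaf2_po10))  # True
print(same_segment(leaf1_po10, leaf1_po20))  # False
```

The point of the comparison is that the ESI, not the port-channel number, is what ties links on different leaves into one logical bundle.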

Configuration Requirements for Active-Active EVPN-VXLAN Multihoming

To automate Active-Active Multihoming with EVPN-VXLAN, you need:

- Point-to-point interfaces with /31 subnets between leaf and spine switches

- Loopback0 IPs on each device (used for BGP EVPN peering and VXLAN source IP)

- BGP AS numbers (leaves use different ASNs; spines can share one)

- ESI values on interfaces that form port-channels

- VLAN-to-VNI mapping for VXLAN and advertisement over EVPN

We’ll automate all of this using a custom Studio.
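Two of the requirements above reduce to simple arithmetic, sketched here in Python. The leaf ASN rule (base ASN plus device ID minus one) follows the template shown later in this guide; the VLAN-to-VNI offset scheme, the function names, and all sample numbers are assumptions chosen for illustration, not something the Studio prescribes.

```python
from typing import Tuple


def leaf_asn(leaf_base_asn: int, leaf_id: int) -> int:
    """Each leaf gets its own ASN: the base ASN for leaf 1, base + 1 for leaf 2, and so on."""
    return leaf_base_asn + leaf_id - 1


def leaf_asn_range(leaf_base_asn: int, leaf_count: int) -> Tuple[int, int]:
    """First and last leaf ASN, i.e. the range a spine would accept from its leaves."""
    return leaf_base_asn, leaf_base_asn + leaf_count - 1


def vlan_to_vni(vlan_id: int, vni_base: int = 10000) -> int:
    """Assumed convention: VNI = fixed base + VLAN ID."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    return vni_base + vlan_id


# Hypothetical plan: 6 leaves starting at ASN 65001, spines sharing one ASN.
print(leaf_asn(65001, 3))        # 65003
print(leaf_asn_range(65001, 6))  # (65001, 65006)
print(vlan_to_vni(10))           # 10010
```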

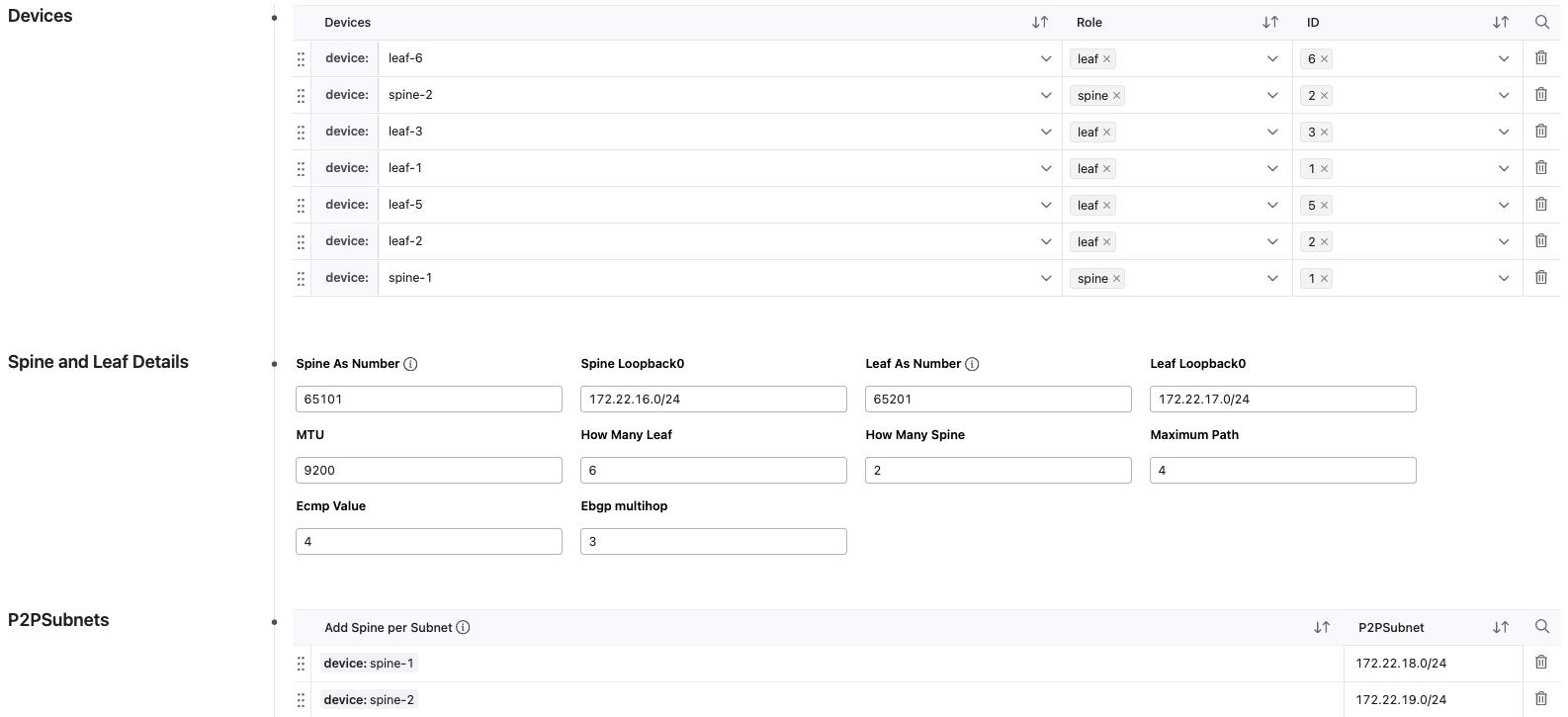

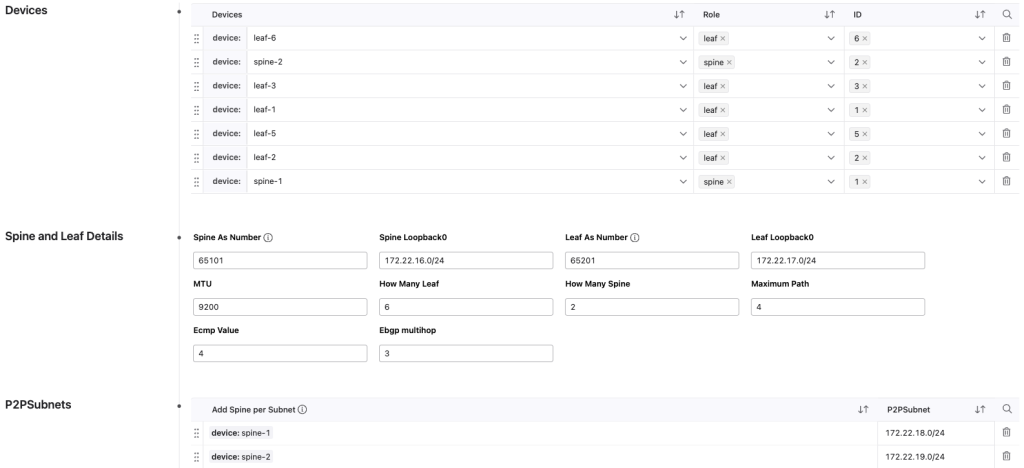

Design of the Custom Studio

The custom Studio form lets you define everything you need. Devices are listed automatically, and you assign each one a role (leaf or spine) and an ID. The form also accepts values for BGP AS numbers, loopback IPs, subnets, ESI values, and other parameters. When submitted, the Studio creates a configuration tailored to your topology.
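As a rough picture of what the form hands to the template, the sketch below models the resolved form data as a plain Python dictionary and runs a few sanity checks on it. The key names mirror the ones the template in the next section reads; the values, the value types, the `check_form` helper, and the "one point-to-point subnet per spine" rule are assumptions inferred from how the template indexes the data, not a documented Studio schema.

```python
import copy

# Fields the template reads from the "spineandleafDetails" group.
REQUIRED_DETAILS = (
    "spineLoopback0", "spineAsNumber", "leafLoopback0", "leafAsNumber",
    "maximumPath", "ecmpValue", "howManyLeaf", "howManySpine",
    "ebgpMultihop", "mtu",
)


def check_form(form: dict) -> list:
    """Return a list of human-readable problems; an empty list means the form looks usable."""
    problems = []
    group = form.get("dcgroup", {})
    details = group.get("spineandleafDetails", {})
    for key in REQUIRED_DETAILS:
        if key not in details:
            problems.append(f"missing field: {key}")
    subnets = [row.get("p2PSubnet") for row in group.get("p2PSubnets", [])]
    spines = int(details.get("howManySpine", 0))
    if len(subnets) != spines:
        problems.append(f"expected {spines} point-to-point subnets, got {len(subnets)}")
    return problems


# Hypothetical input for a fabric with 2 spines and 6 leaves.
example_form = {
    "dcgroup": {
        "spineandleafDetails": {
            "spineLoopback0": "10.0.250.0/24",
            "spineAsNumber": 65000,
            "leafLoopback0": "10.0.255.0/24",
            "leafAsNumber": 65001,
            "maximumPath": 4,
            "ecmpValue": 4,
            "howManyLeaf": 6,
            "howManySpine": 2,
            "ebgpMultihop": 3,
            "mtu": 9214,
        },
        "p2PSubnets": [{"p2PSubnet": "10.1.1.0/24"}, {"p2PSubnet": "10.1.2.0/24"}],
    }
}

print(check_form(example_form))  # []

broken_form = copy.deepcopy(example_form)
del broken_form["dcgroup"]["spineandleafDetails"]["mtu"]
print(check_form(broken_form))   # ['missing field: mtu']
```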

The Code of the Form: Python and Mako

We use Python and Mako templates inside the Studio to generate the configuration dynamically. The template processes interface information, assigns IP addresses, calculates loopbacks, and builds BGP neighbor relationships based on device roles.

<%
# Get the current device from the Studio context (ctx.getDevice()).
device = ctx.getDevice()
device_int_list = list(device.getInterfaces().mapping.keys())

# Get the 'Role' and 'ID' tags of the current device; tag values come back as lists.
role_of_switch = device.getTags(ctx, 'Role')
ID_of_switch = device.getTags(ctx, 'ID')
role_value = [tag.value for tag in role_of_switch]
id_value = [id.value for id in ID_of_switch]

# This list is filled from the form values further down in the template.
p2PSubnet = []
spine_int_subnet = " "
peer_int_list = []
spine_loopback_list = []
interface_index = 0

def spliting(subnet):
    # "10.1.1.0/24" -> ("10.1.1", 0)
    ip_part, subnet_part = subnet.split('/')
    octets = ip_part.split('.')
    first_three_octet = ".".join(octets[:3])
    last_octet = int(octets[3])
    return first_three_octet, last_octet

def generating_IP(subnet, cidr, id_value, interface_index=None, role=None):
    # Leaf point-to-point address: pick the subnet by interface index, odd last octet by leaf ID.
    if isinstance(subnet, list) and role == "leaf":
        subnet = subnet[interface_index]
        first_three_octet, last_octet = spliting(subnet)
        generated_last_octet = (2 * int(id_value[0])) - 1
        ip = first_three_octet + '.' + str(generated_last_octet) + '/' + str(cidr)
        return ip
    # Spine point-to-point address: pick the subnet by spine ID, even last octet by interface index.
    if isinstance(subnet, list):
        subnet = subnet[int(id_value[0]) - 1]
        first_three_octet, last_octet = spliting(subnet)
        generated_last_octet = int(last_octet + interface_index)
        ip = first_three_octet + '.' + str(generated_last_octet) + '/' + str(cidr)
        return ip
    # Loopback address: last octet derived from the device ID.
    else:
        first_three_octet, last_octet = spliting(subnet)
        generated_last_octet = int(id_value[0]) - 1
        ip = first_three_octet + '.' + str(generated_last_octet) + '/' + str(cidr)
        return ip

def get_peer_info(peer_int_obj, ctx, device):
    # Return the role, a link description, and the ID of the device on the other end of the link.
    if peer_int_obj.getPeerDevice() and peer_int_obj.getPeerDevice().hostName:
        peer_device = peer_int_obj.getPeerDevice()
        role_of_peer = peer_device.getTags(ctx, 'Role')
        ID_of_peer = peer_device.getTags(ctx, 'ID')
        peer_role_value = [tag.value for tag in role_of_peer]
        peer_id_value = [tag.value for tag in ID_of_peer]
        description = device.hostName + '-' + peer_int_obj.getPeerDevice().hostName
    else:
        peer_role_value = [False]
        description = False
        peer_id_value = False
    return peer_role_value, description, peer_id_value
%>

% if dc:
<% spine_loopback0 = dc.resolve()["dcgroup"]["spineandleafDetails"]["spineLoopback0"] %>
<% spine_as = dc.resolve()["dcgroup"]["spineandleafDetails"]["spineAsNumber"] %>
<% leaf_loopback0 = dc.resolve()["dcgroup"]["spineandleafDetails"]["leafLoopback0"] %>
<% leaf_as = dc.resolve()["dcgroup"]["spineandleafDetails"]["leafAsNumber"] %>
<% maximum_path = dc.resolve()["dcgroup"]["spineandleafDetails"]["maximumPath"] %>
<% ecmp = dc.resolve()["dcgroup"]["spineandleafDetails"]["ecmpValue"] %>
<% howManyLeaf = dc.resolve()["dcgroup"]["spineandleafDetails"]["howManyLeaf"] %>
<% howManySpine = dc.resolve()["dcgroup"]["spineandleafDetails"]["howManySpine"] %>
<% multihop = dc.resolve()["dcgroup"]["spineandleafDetails"]["ebgpMultihop"] %>
% for i in dc.resolve()["dcgroup"]["p2PSubnets"]:
<% p2PSubnet.append(i["p2PSubnet"]) %>
% endfor
% endif

% if role_value[0] == "spine":
% for i in device_int_list:
<%
peer_int_obj = device.getInterfaces().mapping.values().mapping[i]
peer_role_value, description, peer_id_value = get_peer_info(peer_int_obj, ctx, device)
%>
% if peer_role_value[0] == "leaf":
<%
interface_ip = generating_IP(p2PSubnet, 31, id_value, interface_index)
interface_index += 2
%>
interface ${i}
   mtu ${dc.resolve()["dcgroup"]["spineandleafDetails"]["mtu"]}
   description ${description}
   no switchport
   ip address ${interface_ip}
!
% endif
% endfor
<% loopback_ip = generating_IP(spine_loopback0, 32, id_value) %>
interface loopback0
   ip address ${loopback_ip}
!
peer-filter LEAF-AS
   match as-range ${leaf_as}-${int(leaf_as) + int(howManyLeaf) - 1} result accept
!
ip routing
!
router bgp ${spine_as}
   router-id ${loopback_ip.split('/')[0]}
   distance bgp 20 200 200
   maximum-paths ${maximum_path} ecmp ${ecmp}
   no bgp default ipv4-unicast
   bgp listen range ${p2PSubnet[int(id_value[0]) - 1]} peer-group UNDERLAY peer-filter LEAF-AS
   bgp listen range ${leaf_loopback0} peer-group OVERLAY peer-filter LEAF-AS
   neighbor UNDERLAY peer group
   neighbor OVERLAY peer group
   neighbor OVERLAY update-source loopback 0
   neighbor OVERLAY ebgp-multihop ${multihop}
   neighbor OVERLAY send-community
   neighbor OVERLAY bfd
   !
   address-family ipv4
      neighbor UNDERLAY activate
      redistribute connected
   !
   address-family evpn
      neighbor OVERLAY activate
!
interface vxlan 1
   vxlan source-interface loopback 0
% endif

% if role_value[0] == "leaf":
% for i in device_int_list:
<%
peer_int_obj = device.getInterfaces().mapping.values().mapping[i]
peer_role_value, description, peer_id_value = get_peer_info(peer_int_obj, ctx, device)
%>
% if peer_role_value[0] == "spine":
<%
interface_ip = generating_IP(p2PSubnet, 31, id_value, interface_index, role="leaf")
first_three_octet, last_octet = spliting(interface_ip)
# The spine side of the /31 is the address just below the leaf's own address.
peer_int_ip = f"{first_three_octet}.{last_octet - 1}"
peer_int_list.append(peer_int_ip)
spine_loopback_ip = generating_IP(spine_loopback0, 32, peer_id_value)
spine_loopback_list.append(spine_loopback_ip.split('/')[0])
interface_index += 1
%>
interface ${i}
   mtu ${dc.resolve()["dcgroup"]["spineandleafDetails"]["mtu"]}
   description ${description}
   no switchport
   ip address ${interface_ip}
!
% endif
% endfor
<%
loopback_ip = generating_IP(leaf_loopback0, 32, id_value)
%>
interface loopback0
   ip address ${loopback_ip}
!
ip routing
!
router bgp ${int(leaf_as) + int(id_value[0]) - 1}
   router-id ${loopback_ip.split('/')[0]}
   distance bgp 20 200 200
   maximum-paths ${maximum_path} ecmp ${ecmp}
   no bgp default ipv4-unicast
   neighbor UNDERLAY peer group
   neighbor UNDERLAY remote-as ${spine_as}
% for i in peer_int_list:
   neighbor ${i} peer group UNDERLAY
% endfor
   neighbor OVERLAY peer group
   neighbor OVERLAY remote-as ${spine_as}
% for i in spine_loopback_list:
   neighbor ${i} peer group OVERLAY
% endfor
   neighbor OVERLAY update-source loopback 0
   neighbor OVERLAY ebgp-multihop ${multihop}
   neighbor OVERLAY send-community
   neighbor OVERLAY bfd
   !
   address-family ipv4
      neighbor UNDERLAY activate
      redistribute connected
   !
   address-family evpn
      neighbor OVERLAY activate
!
interface vxlan 1
   vxlan source-interface loopback 0
% endif
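The addressing arithmetic in the template can be tried outside CVP. The following standalone Python sketch re-implements it with plain functions, as I read the template, and checks that the spine and leaf ends of every link land in the same /31. The function names and sample prefixes are hypothetical. It also makes the template's implicit assumptions visible: each point-to-point subnet should start at last octet 0, a spine's leaf-facing interfaces are assumed to be ordered by leaf ID, and a leaf's spine-facing interfaces by spine ID.

```python
import ipaddress


def split_prefix(prefix):
    """'10.1.1.0/24' -> ('10.1.1', 0)"""
    address, _length = prefix.split("/")
    octets = address.split(".")
    return ".".join(octets[:3]), int(octets[3])


def spine_p2p_ip(p2p_subnets, spine_id, leaf_position):
    """Spine side: subnet chosen by spine ID, even last octet per leaf-facing interface (0-based)."""
    base, last = split_prefix(p2p_subnets[spine_id - 1])
    return f"{base}.{last + 2 * leaf_position}/31"


def leaf_p2p_ip(p2p_subnets, leaf_id, spine_position):
    """Leaf side: subnet chosen by spine-facing interface (0-based), odd last octet per leaf ID."""
    base, _ = split_prefix(p2p_subnets[spine_position])
    return f"{base}.{2 * leaf_id - 1}/31"


def loopback_ip(loopback_prefix, device_id):
    """Loopback0: last octet is the device ID minus one."""
    base, _ = split_prefix(loopback_prefix)
    return f"{base}.{device_id - 1}/32"


# Hypothetical fabric: 2 spines, 6 leaves, one point-to-point subnet per spine.
subnets = ["10.1.1.0/24", "10.1.2.0/24"]
for spine_id in (1, 2):
    for leaf_id in range(1, 7):
        spine_end = ipaddress.ip_interface(spine_p2p_ip(subnets, spine_id, leaf_id - 1))
        leaf_end = ipaddress.ip_interface(leaf_p2p_ip(subnets, leaf_id, spine_id - 1))
        # Both ends of a link must sit in the same /31 network.
        assert spine_end.network == leaf_end.network
        print(f"spine-{spine_id} {spine_end} <-> leaf-{leaf_id} {leaf_end}")

print(loopback_ip("10.0.250.0/24", 2))  # 10.0.250.1/32
```

If the interface ordering on a real device does not match the device IDs, the two ends of a link would compute addresses from different /31 pairs, so it is worth verifying the generated configuration in the workspace before pushing it.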

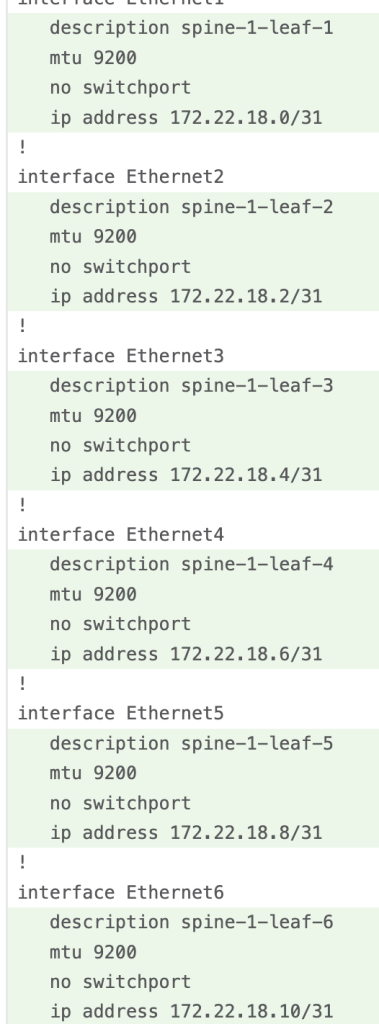

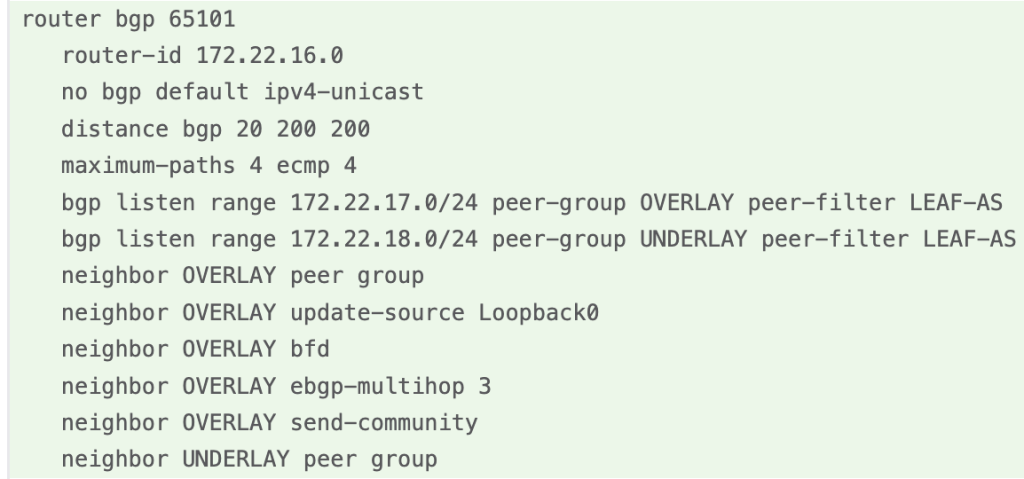

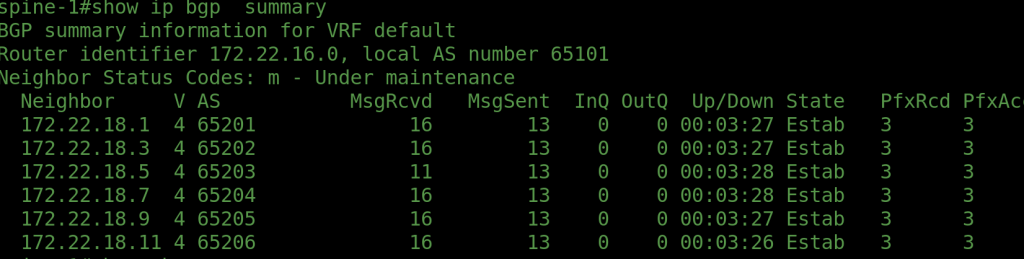

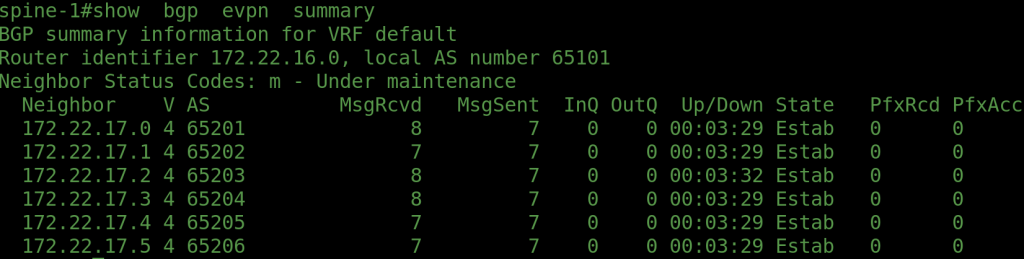

Configuration Output Example

After you submit the form and review the workspace in CVP, the Studio generates full configurations for all devices. Below is a sample output for spine-1, part of a topology with 2 spines and 6 leaf switches. The config includes interface settings, loopbacks, BGP, and VXLAN tunnel setup, all generated automatically by the custom Studio.